In the last post, I discussed training data: mostly that you ought to have it or a way of getting it. If someone pitches you an idea without even a reasonable vision of what the training data would be, they’ve got a lot less credibility. In other words, if you can’t even envision training data for a given task, then the task itself may be impractical.

Next, let’s talk about evaluation with respect to application development, namely, if someone pitches an AI application idea to you:

Question: Do they have an evaluation procedure built into their application development process?

Arguably, evaluation is more important than training data. I chose to discuss training data in the first post because thinking in terms of training data gives you intuitions about what’s possible. It eliminates the infinite, but still leaves you with dreams. Evaluation is where your dreams are torn to shreds, whether or not you have training data.

Fundamentally, I want to cover three things here: why we evaluate, how do we evaluate, and how do we score the results. Understanding these three things is essential to understanding what makes a suitable evaluation; a crappy evaluation sows false confidence, something worse than no evaluation at all.

Why Evaluate

When writing software, getting stuff working in and of itself can be a challenge. In many domains of software development, a lot of the work is gluing APIs together, and getting the interfaces between those to work can be surprisingly challenging. Once the application runs, though, it’s often good to go. The error catching of the software itself plus a few sanity checks can guide the development process to completion, rooting out all but the trickiest of bugs.

Not all software is the same though. In doing AI dev work, “running” is not the same as doing anything useful. To know whether we’re doing anything useful, we have to evaluate our results—that is, systematically test whether it is working as expected.

I’ll talk about this process in detail throughout this post, but it makes more sense if we start with why we do it.

Let’s consider an example. One of the fundamental tools of natural language processing is the part-of-speech tagger—a tool that identifies nouns, verbs, adjectives, etc. in natural language text. From a mere up-and-running perspective, it’s not that hard to build a part of speech tagger that “works.” As long as it doesn’t crash, you’re good, right? Here’s a PoS tagger for Python that “works,” but mostly only in the sense that the code runs:

pos_tagger = lambda t: (t,"NN")

This “works!” An application using PoS tags could take this and execute cleanly, albeit producing output that’s crap. We must evaluate this tagger to know that the accuracy is only about 13%. In other words, unlike with most software development, merely “working” does not mean “working well.”

This is why proper evaluation is essential. Doing something more sophisticated might not come across as “wrong” so superficially. Making something that compiles and spits out results is easy; making one that is accurate is a lot harder. How exactly do we test for that?

How Evaluation is Done

So how exactly does one evaluate? At its core, evaluation is a matter of counting results that are right and wrong, but how do we decide what’s right and wrong?

Typically, this is done by comparing the right answers—usually from training data—against what the system outputs. These, however, cannot be the exact instances given to the system for training. That’s cheating—a simple look-up table could (should!) get 100% performance, in that case. To avoid this, it’s standard practice to hide some of the training data in advance and evaluate on that holdout data. Typically, this involves taking whole documents—usually, about 10% of the total—and stowing them away before adding them to the fraction of the training data that’s actually used for training.

Using holdout data is the essence of evaluation in AI applications. If someone is evaluating on the data they trained on, those results are garbage. They have not shown that they are building a system that makes useful inferences in reasonably novel circumstances, the whole reason we do this crap in the first place.

In some cases, especially unsupervised ones, holdout data isn’t an option. In this case, one can evaluate by hand: give someone the output of the system, and they decide whether it is right or wrong. Typically, this is done by at least two people to ensure that they are accurate. However, you’re most likely to get the best results from a task involving training data, so it’s often best to start there when possible, especially since evaluating by hand is essentially creating training data after the fact anyway.

Counting the Results

I mentioned earlier that evaluation is essentially just counting right and wrong results. This is fundamentally true, but oversimplifies—“right and wrong” is too simple of a paradigm.

Consider, for example, that we’re trying to get nouns, pronouns, and proper nouns out of a document for some reason. The gold standard answers are in yellow:

Trump will be the fifth person in U.S. history to become president despite losing the nationwide popular vote. He will be the first president without any prior experience in public service, while Clinton was the first woman to be the presidential nominee of a major American party.

Imagine that we score the system strictly through seeing which outputs from the system are correct: go down the list of guesses, check each if it’s right or wrong. In other words, we count false positives as wrong. This is really easy to push to 100% accuracy—all you have to do is build a system that’s really conservative in its guesses. For our example above, we could make a system that only selects “he” and “He” as answers, giving us, in blue:

Trump will be the fifth person in U.S. history to become president despite losing the nationwide popular vote. He will be the first president without any prior experience in public service, while Clinton was the first woman to be the presidential nominee of a major American party.

It didn’t do much, but under the standard described above, this is 100% accurate.

On the other hand, you could check to make sure that you got all of the tokens in your gold standard data: go down the list of gold standard tokens, check if it’s in the output data. In other words, we count false negatives as wrong. But this is also really easy to push to 100%—just guess everything:

Trump will be the fifth person in U.S. history to become president despite losing the nationwide popular vote. He will be the first president without any prior experience in public service, while Clinton was the first woman to be the presidential nominee of a major American party.

Given the second standard described, if we check this against the gold standard list, we again have 100% accuracy.

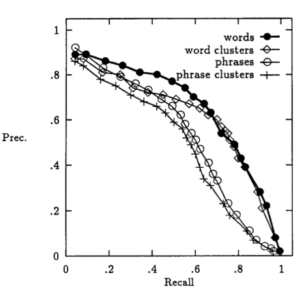

Notice though that these strawman classifiers, while they do well under the metrics they’re intended to cheat, don’t do so well on the other standard. The second classifier is only (14/47) 30% accurate on the first standard; the first classifier is only (1/14) 7.1% accurate on the second standard. The first standard we call precision; the second standard is called recall.

Often, between the two, there is a trade off. Improvements may increase recall, but lower precision in the process, or vice versa. These are often combined into an F1 score, which is a type of average that is biased toward the lower of two fractional values. Systems often push for the highest possible F1 score.

An acceptable F1 score depends on the application. There is no absolute number, though an F1 around 80% is typical for a useful system in many applications.

Parameters, Baselines, and Practice

I mentioned above that sometimes there’s a trade-off between precision and recall. Often these trade-offs occur through tuning parameters—for example, lowering the threshold to call something a match—or adding new features, thereby allowing more results to be considered matches. Sometimes, these new features and tweaks will make things perform worse; sometimes they make things better. As Levy, Goldberg, and Dagan (2015) showed, they can make or break sophisticated deep learning algorithms. As you build such an application, parameters you’ve never considered are unveiled to you, for which you have to make on the fly decisions, only to come back later and tweak.

One of the frightening truths of AI: the often simple, stupid thing you hack together in the first week of development can outperform a very sophisticated system, for quite a long time. There are a lot of reasons for this:

- Simple things often work remarkably well.

- Simple things have few parameters to adjust, so you can easily optimize them automatically or by hand.

- Simple things are fast, so you can experiment quickly within a larger space of parameter choices.

This simple, stupid solution is called a baseline. Most NLP and AI papers working on a new problem have some dead simple baseline, and if they’re working in a previously pioneered problem, the baseline is typically the previous state of the art approach to a problem.

In either case, the baseline gives the evaluation scores some context to be compared to. Numbers alone are meaningless; it is context that breathes life into them.

For example, if someone is working on a system and claims they have 99% accuracy, does a dead simple baseline give 98.9% accuracy? That system isn’t much of an improvement. Baseline at 99.5%? Then the proposed system is actually hurting.

If on a problem you’re working on, you can’t beat the baseline, it’s time to go back to the drawing board—or, just deploy the baseline as the state-of-the-art AI in your application. Beating the baseline is an academic necessity, but not a business one. In fact, the baseline might be better than anything else out there at your specific problem. Simplicity can produce beautiful solutions—just don’t believe someone who sells it like they’ve cranked out some kind of machine god. They haven’t, and that kind of hype is bad for everyone involved in this sort of work.

tl;dr: Evaluation is a systematic way of verifying that an AI system works. Without a proper evaluation, an AI application can easily be garbage. Proper evaluation typically involves holdout data, precision and recall, and a baseline for comparison.

If an evaluation meets these criteria, it tells you how well the system works and gives you some clue as to how well it will work, but only in comparable circumstances. For example, if you want to do something with news, and you train your system on news, you can expect results like your evaluation process told you. But if you train on news and want to process forum posts, chat logs, or medical dictation, you’re probably not going to get comparable results.

In this post and the prior, I talked about fundamentals: the kind of stuff they teach in AI, ML, and NLP courses. In the following posts, (likely, much shorter!) I’ll be discussing questions that are a bit more specific to applications rather than general to AI and NLP: namely, how do you know that proposed ideas are realistic given the state of the art in NLP and what’s known about language and knowledge? How do those act as limitations on what’s possible using NLP, and how can we get around those limitations for the time being?